Just days after showing off nearly two dozen Cybercabs running on Full Self-Driving, Tesla finds itself under investigation by the National Highway Traffic Safety Administration. The feds are examining 2.4 million FSD-equipped vehicles over crashes in “reduced visibility conditions,” including one that was fatal.

NHTSA’s investigating four complaints involving crashes of vehicles using Tesla’s Full Self-Driving technology.

NHTSA opened the investigation after four Teslas were involved in crashes while reportedly using Full Self-Driving in settings where visibility was less than ideal. In these crashes, the reduced roadway visibility arose from conditions such as sun glare, fog, or airborne dust. A Tesla vehicle fatally struck a pedestrian in one crash and an injury was reported in another.

This is not the first investigation by NHTSA or other federal regulators into Tesla’s semi-autonomous driving technology. Last year, NHTSA ordered Tesla to perform an over-the-air recall of FSD software to address problems with vehicles acting “unsafe around intersections” and ignoring speed limits.

The company performed a similar OTA recall for rolling stops at intersections in January 2022. These don’t include the recalls and investigations related to Autopilot, which offers less functionality. These are also not the first incidents related to the technology.

In April, a Tesla Model S using Full Self-Driving crashed into a 28-year-old motorcyclist near Seattle. The rider died in the collision. It was the second fatality attributed to FSD, in addition to the one reported in the current round of complaints.

What’s affected and what’s being investigated

NHTSA’s investigation unit, the Office of Defects Investigation, opened a “Preliminary Evaluation” of FSD, which is available as an option for: 2016-2024 Models S and X, 2017-2024 Model 3, 2020-2024 Model Y, and 2023-2024 Cybertruck.

This Preliminary Evaluation is opened to assess:

- The ability of FSD’s engineering controls to detect and respond appropriately to reduced roadway visibility conditions;

- Whether any other similar FSD crashes have occurred in reduced roadway visibility conditions and, if so, the contributing circumstances for those crashes; and

- Any updates or modifications from Tesla to the FSD system that may affect the performance of FSD in reduced roadway visibility conditions. In particular, this review will assess the timing, purpose, and capabilities of any such updates, as well as Tesla’s assessment of their safety impact.

The investigation is examining vehicles using FSD-Beta and FSD-Supervised, according to the filing. There is no timeframe offered to complete the investigation. A recall cannot be ordered without an investigation.

Tesla’s technology

Tesla CEO Elon Musk has long maintained that a camera-only system is the most effective way to achieve full autonomy.

That Tesla vehicles equipped with FSD have difficulty in less-than-ideal conditions shouldn’t be a surprise, according to some critics. The system relies solely on cameras, which have several shortcomings: they cannot see through fog and struggle with bright glare, among other issues. Most automakers use a combination of cameras, radar and lidar for their semi-autonomous driving technologies.

“Weather conditions can impact the camera’s ability to see things and I think the regulatory environment will certainly weigh in on this,” Jeff Schuster, vice president at GlobalData, told Reuters.

“That could be one of the major roadblocks in what I would call a near-term launch of this technology and these products.”

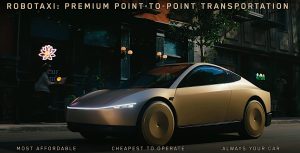

Earlier versions of Autopilot, a precursor to Full Self-Driving, also used radar to provide additional information for the system. However, Tesla CEO Elon Musk remains resolute in his belief that a camera-only system is the best option, a position he reiterated during the introduction of the Cybercabs last week.
